Fuhad Abdulla

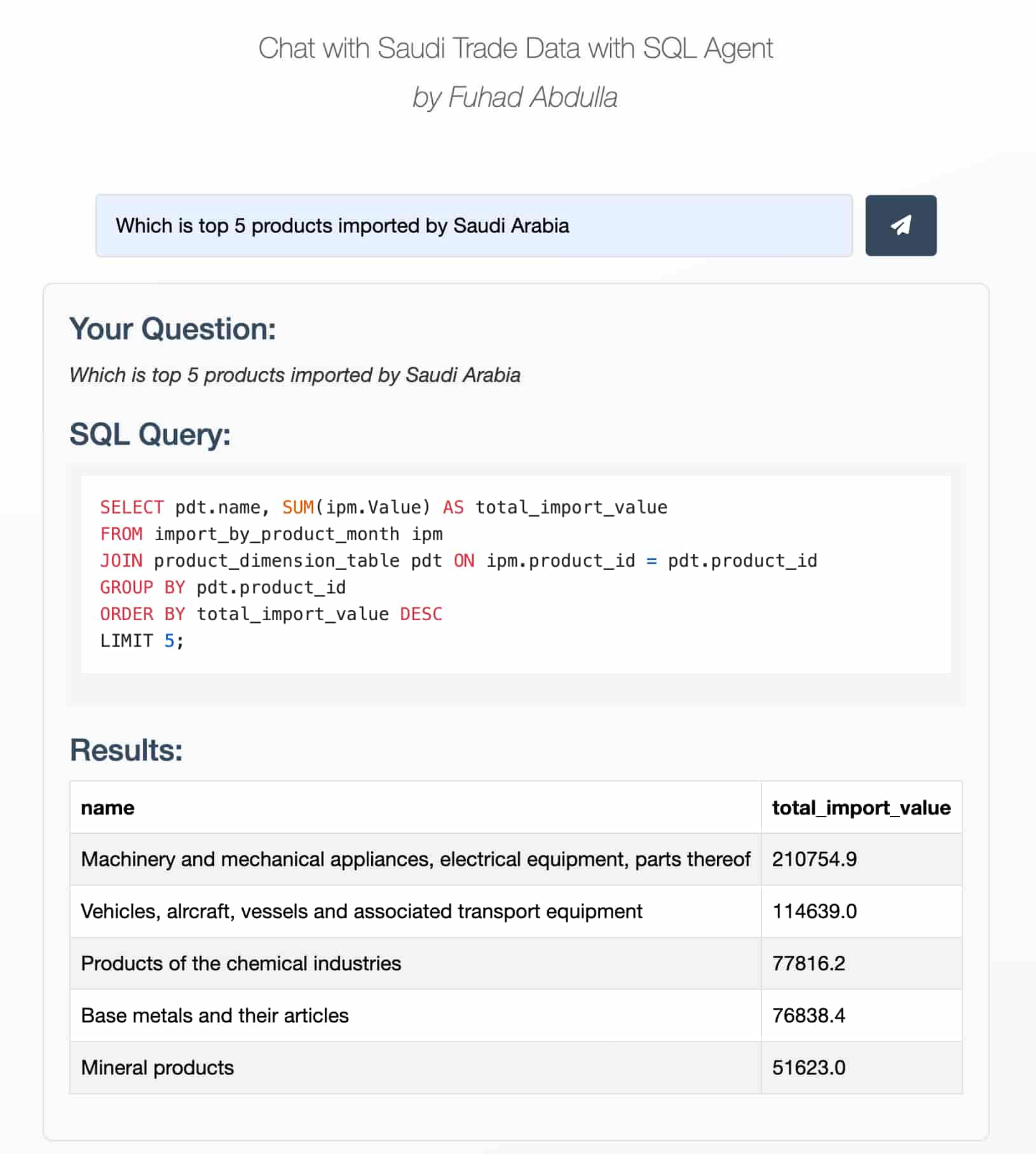

Can we chat with SQL databases using Natural Language instead of writing complex queries? 🤖💬

🚀 Tech Stack:

- 🗄️ Database: SQLite

- 🖥️ Interface: Dash (to integrate with the Saudi International Trade Analysis Project)

- 🐍 Programming Language: Python

- 🧠 LLM: OpenAI (model gpt-4o)

- 📊 Data: The Saudi International Trade data was originally in an Excel file, which I converted to SQLite using Python for this project. 🇸🇦

As a Data Analyst, staying updated with the latest technology is crucial. 🔍📈

I recently came across a video where Uber leveraged Large Language Models (LLMs) to generate SQL queries. This approach significantly reduced query creation time—from 10 minutes using traditional methods to just 3 minutes with AI. 🚗💨

Inspired by this, I wanted to build a smaller-scale version of their system.

How Uber Implemented It 👨💻

Uber used multiple specialized LLM agents, each trained for specific tasks, such as:

- 🗂️ Selecting the appropriate table

- 🧑💻 Constructing the SQL query

- ✅ Validating the output

With a similar approach, we can achieve 70-80% accuracy using two primary methods:

1. Table Schema Method 🏗️

This method involves passing the database schema to an LLM, such as OpenAI’s models, open-source LLMs on Groq, or locally hosted models via Ollama.

My Attempts:

- 🐍 Attempt 1: OpenAI Python Library

The LLM-generated queries didn’t make sense because the model lacked schema awareness. - 📝 Attempt 2: System Prompt with Schema and OpenAI Python Library

I created asystem_prompt.txtfile containing:- 📜 Table schema details

- 🧠 Table descriptions and when to use them

- 🔗 Instructions on which tables to JOIN

Result: The LLM started generating meaningful queries with accurate results! ✅

2. Retrieval-Augmented Generation (RAG) Method 📚

In this approach, a vector database stores previously successful queries, allowing the LLM to retrieve and adapt them for new queries. This improves accuracy as more data is added.

Why RAG is Better for Production:

- 📈 The more past queries stored, the more accurate the AI becomes

- ⚙️ Several tools, like Vanna AI, provide this functionality out of the box.

In Vanna AI, we can input documentation about the business, instructions for the AI, and it gets better and better as we use it! 📊🤖

If Security & Privacy Are a Concern 🔒

- 🖥️ Use local LLMs like Ollama or Mistral.

- 🗄️ Store embeddings in a local vector database.

- 🚫 Implement query validation (e.g., prevent DROP TABLE or other dangerous SQL injections).

- 🛡️ Restrict execution to read-only databases for safety.

Cost & Performance Considerations 💸⚡

Using OpenAI’s API or similar services can be expensive and slow, especially for large business databases with many tables. Instead, local or open-source LLMs running on a GPU offer a faster, cost-effective alternative. 💰🖥️